The group's research is supported by a substantial computing infrastructure, which members of the group have deployed and evolved over almost 20 years.

Our first steps were carried out on shared-memory cc-NUMA supercomputers, mainly SGI Power Challenge, SGI Origin 3000, and SGI Altix series servers.

Since then, our computing resources have focused on distributed systems, namely HPC/HTC clusters.

All our current infrastructure is installed and running in the 3Mares Data Center, one of the main computing facilities at the University of Cantabria, especially for high-performance and high-throughput (HPC/HTC) computing. The data center was built in 2010 and has been fully operational since then.

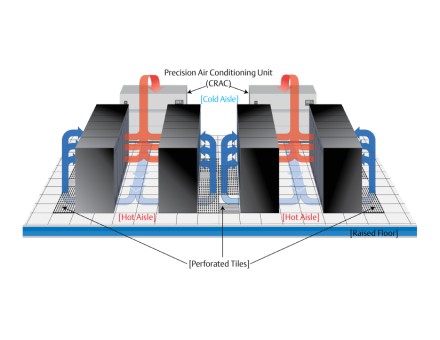

To keep the computing and networking equipment running correctly and safely, the center has the following systems:

- Efficient cooling system

- Central and distributed UPS systems

- Smart power control systems

- Smart environment control system

- Fire prevention system

- Access control system

CLUSTER Docs

Cluster user manuals

OpenGE (SGE EE)

Development

CLUSTER HPC Monitor

Ganglia monitoring system (public side)

CLUSTER HPC Summary

Running nodes

# g1/g2

Model: HP ProLiant DL 145, 2nd generation, dual-processor

Processor: 2 AMD Opteron 275 dual core

Memory: 8 GB

Hard Disk: 80 GB SATA

Network: 2 Gigabit Ethernet

Nodes: 20

Total Cores: 40

- Out of service. Some of them are reused for development tasks.

# g3 (gslow)

Model: HP ProLiant DL 160, 5th generation

Processor: 2 INTEL Xeon 5472 quad core

Memory: 16 GB

Hard Disk: 160 GB SATA

Network: 2 Gigabit Ethernet

Nodes: 16

Total Cores: 128

# g6

Enclosure: 5 SUPERMICRO SuperServer 6026TT-HT

Model: 4 Super X8DTT-HF+

Processor: 2 INTEL Xeon X5650 six-core (HT)

Memory: 24/48 GB

Hard Disk: 250 GB SATA

Network: 2 Gigabit Ethernet

Nodes: 20

Total Cores: 480 (HT)

# g7

Enclosure: 5 SUPERMICRO SuperServer 6026TT-HT

Model: 4 Super X8DTT-HF+

Processor: 2 INTEL Xeon E5645 six-core (HT)

Memory: 48/54 GB

Hard Disk: 250 GB SAS

Network: 2 Gigabit Ethernet

Nodes: 20

Total Cores: 480 (HT)

# g8 (gfast/ghuge)

Enclosure: 5 SUPERMICRO SuperServer 6029TR-HTR

Model: 4 Super X11DPT-L

Processor: 2 INTEL Xeon Silver 4216 (Cascade Lake) 16-core @ 2.10 GHz (HT)

Memory: 128 GB

Hard Disk: 250 GB SSD

Network: 2 Gigabit Ethernet

Nodes: 8

Total Cores: 512 (HT)

# fe-ts

Model: Supermicro SYS-6016GT-TF-TC2

Processor: 2 INTEL Xeon 5504 quad core

GPUs: 2x Nvidia Tesla C1060 GPU Cards

Memory: 10 GB

Hard Disk: 250 GB SAS

Network: 2 Gigabit Ethernet

Nodes: 1

Total Cores: 8 (HT)

# Sun T2

Model: Sun UltraSPARC T5120

Processor: 1 Sun Niagara

Memory: 4 GB

Hard Disk: 4x 146 GB SAS

Network: 4 Gigabit Ethernet

Nodes: 1

Total Cores: 4 (8 concurrent threads per core)

# Sun T1

Model: Sun UltraSPARC T1

Processor: 1 Sun Niagara

Memory: 4 GB

Hard Disk: 4x 146 GB SAS

Network: 4 Gigabit Ethernet

Nodes: 1

Total Cores: 4 (8 concurrent threads per core)

# GPUs node

Model: SUPERMICRO Superserver AS-4023S-TRT

Motherboard: H11DSi-NT

Processor: 2 AMD EPYC 7281 16-Core Processor

Memory: 148 GB

Hard Disk: 1x 250 GB SAS

Network: 4 Gigabit Ethernet

Nodes: 1

Total Cores: 32

GPU cards: 1x Nvidia Quadro RTX 4000, 1x AMD RX 580 AORUS 8G

Compilation nodes

# XEN Container

Model: HP ProLiant DL 160, 5th generation

Processor: 2 INTEL Xeon 5472 quad core

Memory: 16 GB

Hard Disk: 160 GB SATA

Network: 2 Gigabit Ethernet

Nodes: 2

Total Cores: 16

- Both Xen domains can run on any of these Xen servers.

Frontend nodes

# XEN Container

Model: HP ProLiant DL 170h, 6th generation

Processor: 2 INTEL Xeon X5550 quad core

Memory: 24 GB

Hard Disk: 160 GB SATA

Network: 2 Gigabit Ethernet

Nodes: 6

Total Cores: 32

- Both Xen domains can run on any of these Xen servers.

TOTAL Cores: ~ 1200

TOTAL Cores (HT): ~ 1600

TOTAL Memory: ~ 8 TB

TOTAL Hard Disk: ~ 160 TB

TOTAL Nodes: 120
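The per-group "Total Cores" figures listed above for g3, g6, g7, and g8 are consistent with a simple product: nodes × processors per node × cores per processor, doubled where the figure is marked (HT). The following is a minimal sketch of that arithmetic, assuming two hardware threads per core under Hyper-Threading; the `NodeGroup` class and `summarize` helper are illustrative names, not part of any cluster tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeGroup:
    name: str
    nodes: int
    sockets: int            # processors per node
    cores_per_socket: int
    hyperthreading: bool    # True when the listed total is marked (HT)

    @property
    def physical_cores(self) -> int:
        return self.nodes * self.sockets * self.cores_per_socket

    @property
    def listed_total(self) -> int:
        # Assumption: Hyper-Threading exposes 2 hardware threads per core.
        return self.physical_cores * (2 if self.hyperthreading else 1)


# Values copied from the g3, g6, g7 and g8 entries above.
GROUPS = [
    NodeGroup("g3", nodes=16, sockets=2, cores_per_socket=4, hyperthreading=False),
    NodeGroup("g6", nodes=20, sockets=2, cores_per_socket=6, hyperthreading=True),
    NodeGroup("g7", nodes=20, sockets=2, cores_per_socket=6, hyperthreading=True),
    NodeGroup("g8", nodes=8, sockets=2, cores_per_socket=16, hyperthreading=True),
]


def summarize(groups):
    """Map group name -> (physical cores, total as listed on this page)."""
    return {g.name: (g.physical_cores, g.listed_total) for g in groups}


if __name__ == "__main__":
    for name, (physical, listed) in summarize(GROUPS).items():
        print(f"{name}: {physical} physical cores, {listed} as listed")
```

For these four groups the listed totals add up to 1600, which is in line with the approximate HT total above, although the page does not state how that total was derived.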

STORAGE Systems

AFS

# MSA20

Model: HP MSA20 backplane

Controller: HP SmartArray 6404 UltraSCSI

Hard Disk: 12x 500 GB SATA

Connections: UltraSCSI

Redundancy: RAID 6

# MSA70

Model: HP MSA70 backplane

Controller: HP SmartArray P800 UltraSCSI

Hard Disk: 25x 300 GB SAS

Connections: SAS

Redundancy: RAID 6

# SUPERMICRO 1

Enclosure: SuperMicro Chassis SC847E backplane

Processor: 2x Intel Xeon E5-2620 (Sandy Bridge, 6 cores, 2.0 GHz)

Memory: 32 GB

Controller: SAS2 expander (6 Gb/s)

Hard Disk: 36x 1TB SAS

Connections: PCI

Network: 10G PCI-E

Redundancy (storage): RAID-Z (ZFS software RAID)

Lustre

# SUPERMICRO 2

Enclosure: SuperMicro Chassis SC847E backplane

Processor: 2x Intel Xeon E5-2620 (Sandy Bridge, 6 cores, 2.0 GHz)

Memory: 32 GB

Controller: SAS2 expander (6 Gb/s)

Hard Disk: 36x 1TB SAS

Connections: PCI

Network: 10G PCI-E

Redundancy (storage): RAID-Z (ZFS software RAID)
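As a rough guide to what the disk counts above mean in practice, the sketch below estimates raw and usable capacity. It assumes each RAID 6 array is a single group with no hot spares (two disks' worth of space goes to parity) and ignores filesystem overhead. The RAID-Z layout of the SuperMicro servers is not described here, so only their raw capacity is computed. Function names are illustrative.

```python
def raw_capacity_gb(disks: int, disk_gb: int) -> int:
    """Raw capacity: number of disks times the size of each disk."""
    return disks * disk_gb


def raid6_usable_gb(disks: int, disk_gb: int) -> int:
    """Usable capacity of one RAID 6 group (two disks' worth of parity).

    Assumption: all disks form a single group and none is a hot spare.
    """
    if disks < 4:
        raise ValueError("RAID 6 needs at least 4 disks")
    return (disks - 2) * disk_gb


# (disk count, disk size in GB), copied from the entries above.
RAID6_ARRAYS = {"MSA20": (12, 500), "MSA70": (25, 300)}
SUPERMICRO_DISKS = (36, 1000)  # per server; RAID-Z layout not stated

if __name__ == "__main__":
    for name, (disks, size) in RAID6_ARRAYS.items():
        print(name, raw_capacity_gb(disks, size), raid6_usable_gb(disks, size))
    print("SUPERMICRO (each, raw):", raw_capacity_gb(*SUPERMICRO_DISKS))
```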

SERVICES nodes

XEN domains mainly

1x “enclosure” HP DL1000

--> 4x HP ProLiant DL 170h nodes, 6th generation, dual-processor, with INTEL Xeon X5550 quad core.

4x HP ProLiant DL 160 nodes, 5th generation, dual-processor, with INTEL Xeon 5472 quad core.

8x Xen domains in charge of managing several service roles:

--> Web services

--> Monitor services (Ganglia/Nagios3)

--> Network services (DHCP/DNS)

--> Server images and cloning services

--> GitLab repository

--> Docker hub repository

--> Docker master (virtual node)

--> Kubernetes master (kubes cluster)

--> Floating software licenses

--> Information services (Kerberos/LDAP)

--> Frontend nodes for the HPC cluster calderon

--> Compilation nodes for the HPC cluster calderon

--> Load balancer (ssh connections) for the HPC cluster calderon

--> Job manager/scheduler (OpenGE) for the HPC cluster calderon
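Since jobs on calderon go through an SGE-style scheduler, a submission typically comes down to a `qsub` call. The sketch below only builds such a command line and does not run it. The flags (`-N`, `-cwd`, `-q`, `-pe`) are standard Grid Engine options, but the queue name `gfast` (borrowed from the g8 alias above), the parallel environment name `mpi`, and the script name are assumptions; check the cluster user manuals for the real values.

```python
import shlex


def build_qsub_command(script, job_name, queue=None, pe=None, slots=None):
    """Return a Grid Engine qsub command as a list of arguments.

    queue and pe are site-specific names; the ones used in the example
    below are placeholders, not confirmed calderon settings.
    """
    cmd = ["qsub", "-N", job_name, "-cwd"]  # name the job, run in current dir
    if queue is not None:
        cmd += ["-q", queue]
    if pe is not None:
        if slots is None or slots < 1:
            raise ValueError("a parallel environment needs a positive slot count")
        cmd += ["-pe", pe, str(slots)]
    cmd.append(script)
    return cmd


if __name__ == "__main__":
    # Hypothetical 32-slot parallel job.
    command = build_qsub_command("run.sh", "demo", queue="gfast", pe="mpi", slots=32)
    print(shlex.join(command))
```

Building the argument list separately from running it keeps the command easy to inspect before handing it to `subprocess.run` on a frontend node.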

NETWORKING

Ethernet network based on a star topology with dual 10 Gb ports

InfiniBand network for HPC jobs only, implemented with a Mellanox switch with 24 dual 10 Gb ports